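I am a load testing engineer, and I have recently been working on a project that is expected to make heavy use of Apache Kafka. Because we work remotely, getting access to the test environment took weeks. So as not to waste time, I decided to deploy a local test bench in Kubernetes.

Anyone who has worked with Apache Kafka will confirm that the official documentation does not cover every detail of installation and configuration. I hope this step-by-step guide helps you cut the time needed to deploy a test environment. Please note that running stateful applications in containers is far from the best idea, so this guide is not intended for deploying a production environment.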

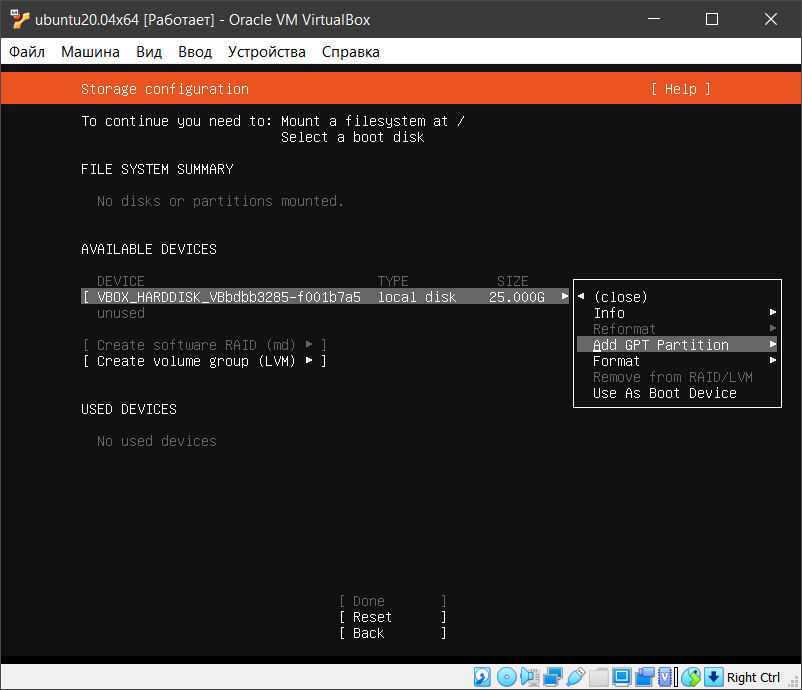

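This guide describes how to create a virtual machine in VirtualBox, install and configure the operating system, and install Docker, Kubernetes and a monitoring system. Two Apache Kafka clusters are deployed in Kubernetes: "production" and "backup". MirrorMaker 2.0 replicates messages from production to backup. Communication between the nodes of the production cluster is protected by TLS. Unfortunately, I cannot upload the script that generates the certificates to Git. As an example, you can use the certificates from the certs/certs.tar.gz archive. At the end of the tutorial, we describe how to deploy a Jmeter cluster and run test scripts.

The sources are available in the repository: github.com/kildibaev/k8s-kafka

This guide is written for Kubernetes beginners, so if you already have experience with containers, you can go straight to section "12. Deploying the Apache Kafka cluster".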

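Q&A:

- Why Ubuntu? I originally deployed Kubernetes on CentOS 7, but after one of the updates the environment stopped working. I also noticed that load tests run in Jmeter behaved unpredictably on CentOS. If you have run into this, please describe in the comments how you solved it. On Ubuntu everything is much more stable.

- Why not k3s or MicroK8s? In short, neither k3s nor MicroK8s can work with a local Docker repository out of the box.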


- Flannel? kubernetes Flannel — , .

- Docker, CRI-O? CRI-O .

- Why is MirrorMaker 2.0 deployed in Kafka Connect? Kafka Connect makes it possible to change MirrorMaker 2.0 parameters "on the fly" through the REST API.
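To illustrate the last answer, here is a minimal sketch of reading and replacing a connector configuration through the Kafka Connect REST API. The host name, port and connector name are the ones used later in this guide. The function names and the CONNECT_URL variable are hypothetical helpers introduced only for this example, and the sketch assumes curl is available in the container.

```shell
#!/bin/sh
# Base URL of the Kafka Connect REST API (assumed; matches the address used later in this guide).
CONNECT_URL=${CONNECT_URL:-http://mirrormaker-0.mirrormaker:8083}

# Build the URL of the config resource of a connector.
connector_config_url() {
  printf '%s/connectors/%s/config\n' "$CONNECT_URL" "$1"
}

# Read the current configuration of a connector (GET /connectors/<name>/config).
get_connector_config() {
  curl -s "$(connector_config_url "$1")"
}

# Replace the configuration with the JSON file passed as the second argument
# (PUT /connectors/<name>/config). The connector picks up the new settings without a pod restart.
put_connector_config() {
  curl -s -X PUT -H "Content-Type: application/json" \
    --data @"$2" "$(connector_config_url "$1")"
}

# Only print the URL here, so the sketch can be run without a cluster.
connector_config_url MirrorSourceConnector
```

Inside the connect container, something like `get_connector_config MirrorSourceConnector` would then return the current settings as JSON.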


1.

2 6-8 . , Rancher K3S.

2. Ubuntu Server 20.04

, . :

- IP , ( 10.0.2.15);

- Kubernetes , swap , ;

- "Install OpenSSH server".

3. Ubuntu

3.1. Firewall

sudo ufw disable

3.2. Swap

sudo swapoff -a

sudo sed -i 's/^\/swap/#\/swap/' /etc/fstab

3.3. OpenJDK

OpenJDK is installed for its keytool utility:

sudo apt install openjdk-8-jdk-headless

3.4. SSH key authentication (optional)

Instructions from DigitalOcean:

https://www.digitalocean.com/community/tutorials/how-to-set-up-ssh-keys-on-ubuntu-20-04-ru

4. Installing Docker

# Switch to the root user

sudo su

# (Install Docker CE)

## Set up the repository:

### Install packages to allow apt to use a repository over HTTPS

apt-get update && apt-get install -y \
  apt-transport-https ca-certificates curl software-properties-common gnupg2

# Add Docker’s official GPG key:

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | apt-key add -

# Add the Docker apt repository:

add-apt-repository \
  "deb [arch=amd64] https://download.docker.com/linux/ubuntu \
  $(lsb_release -cs) \
  stable"

# Install Docker CE

apt-get update && apt-get install -y \
  containerd.io=1.2.13-2 \
  docker-ce=5:19.03.11~3-0~ubuntu-$(lsb_release -cs) \
  docker-ce-cli=5:19.03.11~3-0~ubuntu-$(lsb_release -cs)

# Set up the Docker daemon

cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF

mkdir -p /etc/systemd/system/docker.service.d

# Restart Docker

systemctl daemon-reload

systemctl restart docker

# If you want the docker service to start on boot, run the following command:

sudo systemctl enable docker

5. Configuring iptables

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sudo sysctl --system

6. Installing kubeadm, kubelet and kubectl

sudo apt-get update && sudo apt-get install -y apt-transport-https curl

curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

cat <<EOF | sudo tee /etc/apt/sources.list.d/kubernetes.list

deb https://apt.kubernetes.io/ kubernetes-xenial main

EOF

sudo apt-get update

sudo apt-get install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl

7. Deploying the Kubernetes cluster

Initialize the control-plane node:

# Pulling images required for setting up a Kubernetes cluster

# This might take a minute or two, depending on the speed of your internet connection

sudo kubeadm config images pull

# Initialize a Kubernetes control-plane node

sudo kubeadm init --pod-network-cidr=10.244.0.0/16

Run the following commands as a regular user (not root):

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

8. Installing Flannel

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

9. Allowing pods to run on the control-plane node

If Kubernetes runs standalone, allow pods to be scheduled on the control-plane node:

kubectl taint nodes --all node-role.kubernetes.io/master-

10. Alias for kubectl

alias k='kubectl'

echo "alias k='kubectl'" >> ~/.bashrc

11. Installing Prometheus, Grafana, Alert Manager and Node Exporter

Install kube-prometheus:

curl -O -L https://github.com/coreos/kube-prometheus/archive/master.zip

sudo apt install -y unzip

unzip master.zip

cd kube-prometheus-master

kubectl create -f manifests/setup

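kubectl create -f manifests/

Run the command below and wait for the pods to start. All pods should be in the Running state:

kubectl get pods -w -n monitoring

Kafka and Zookeeper metrics are exposed by JMX Exporter. For Prometheus to collect them, add a ServiceMonitor:

k apply -f https://raw.githubusercontent.com/kildibaev/k8s-kafka/master/servicemonitor/jmx-exporter-servicemonitor.yaml

Create a service so that the Grafana web interface can be reached:

kubectl apply -f https://raw.githubusercontent.com/kildibaev/k8s-kafka/master/service/grafana-svc.yaml

Grafana is then available at http://localhost:32000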

, Grafana :

- Grafana http://127.0.0.1:3000

Add the dashboard to Grafana. Open http://127.0.0.1:3000/dashboard/import and paste the contents of grafana-dashboard.json into the "Import via panel json" field.

12. Deploying the Apache Kafka cluster

# Clone the repository

git clone https://github.com/kildibaev/k8s-kafka.git $HOME/k8s-kafka

cd $HOME/k8s-kafka

12.1. Apache Zookeeper

A StatefulSet gives the pods predictable host names. Three Apache Zookeeper instances are started: zookeeper-0.zookeeper, zookeeper-1.zookeeper and zookeeper-2.zookeeper
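The host names above follow the StatefulSet pattern `<statefulset name>-<ordinal>.<service name>`. As a rough sketch, the same list can be generated in the shell and joined into a connection string. The function names are hypothetical helpers, and the client port 2181 is only the Zookeeper default, assumed here rather than taken from the repository manifests.

```shell
#!/bin/sh
# Print the host names of the pods of a StatefulSet, one per line.
# Arguments: StatefulSet name, service name, number of replicas.
statefulset_hosts() {
  name=$1
  service=$2
  replicas=$3
  i=0
  while [ "$i" -lt "$replicas" ]; do
    echo "${name}-${i}.${service}"
    i=$((i + 1))
  done
}

# Join the host names into a comma-separated host:port list.
# Arguments: StatefulSet name, service name, number of replicas, port.
connect_string() {
  statefulset_hosts "$1" "$2" "$3" | sed "s/\$/:$4/" | paste -sd, -
}

# Port 2181 is an assumption (the Zookeeper default client port).
connect_string zookeeper zookeeper 3 2181
```

The same helper yields the Kafka bootstrap list used later in this guide when called as `connect_string kafka kafka 2 9092`.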

# Build the zookeeper-base image

sudo docker build -t zookeeper-base:local-v1 -f dockerfile/zookeeper-base.dockerfile .

# Create the service for Zookeeper

k apply -f service/zookeeper-svc.yaml

# Deploy the Apache Zookeeper cluster

k apply -f statefulset/zookeeper-statefulset.yaml

# Wait until all pods are Running. Watch the pod status:

k get pods -w

12.2. Apache Kafka

Two Apache Kafka instances are started: kafka-0.kafka and kafka-1.kafka

# Build the kafka-base image

sudo docker build -t kafka-base:local-v1 -f dockerfile/kafka-base.dockerfile .

# Create the service for Kafka

k apply -f service/kafka-svc.yaml

# Deploy the Apache Kafka cluster

k apply -f statefulset/kafka-statefulset.yaml

# Wait until all pods are Running. Watch the pod status:

k get pods -w

13. Testing

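13.1. Sending messages

As an example, messages are sent at a rate of 10 per second. Each message is 100 bytes in size, and 30000 messages are sent in total.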
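A quick back-of-the-envelope check of these parameters shows how long the run takes and how much data it produces. The sketch below only does the arithmetic; the function names are hypothetical, and the payload figure ignores record keys and protocol overhead.

```shell
#!/bin/sh
# Time needed to send all records at a fixed rate, in seconds.
# Arguments: number of records, throughput in records per second.
perf_duration_s() {
  echo $(( $1 / $2 ))
}

# Total payload size in bytes.
# Arguments: number of records, record size in bytes.
perf_payload_bytes() {
  echo $(( $1 * $2 ))
}

duration=$(perf_duration_s 30000 10)
echo "duration: ${duration} s (about $((duration / 60)) min)"
echo "payload: $(perf_payload_bytes 30000 100) bytes"
```

With the values above the test runs for 3000 seconds (about 50 minutes) and sends 3000000 bytes of payload, so raise the throughput value if you want a shorter run.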

# Start a new pod named producer

k run --rm -i --tty producer --image=kafka-base:local-v1 -- bash

# Send messages to the topic topicname

bin/kafka-producer-perf-test.sh \
  --topic topicname \
  --num-records 30000 \
  --record-size 100 \
  --throughput 10 \
  --producer.config /config/client.properties \
  --producer-props acks=1 \
  bootstrap.servers=kafka-0.kafka:9092,kafka-1.kafka:9092 \
  buffer.memory=33554432 \
  batch.size=8196

13.2. Receiving messages

# Start a new pod named consumer

k run --rm -i --tty consumer --image=kafka-base:local-v1 -- bash

# Read messages from the topic topicname

bin/kafka-consumer-perf-test.sh \
  --broker-list kafka-0.kafka:9092,kafka-1.kafka:9092 \
  --consumer.config /config/client.properties \
  --messages 30000 \
  --topic topicname \
  --threads 2

14. MirrorMaker 2.0

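14.1. Running MirrorMaker 2.0 in Kafka Connect

The Apache Kafka cluster deployed earlier is referred to as production. Now create a pod with three containers: Apache Zookeeper, Apache Kafka and Kafka Connect. This new Apache Kafka instance is referred to as backup, and topics are replicated from production to backup.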

k apply -f service/mirrormaker-svc.yaml

# Create a pod with three containers: Apache Zookeeper, Apache Kafka and Kafka Connect

k apply -f statefulset/mirrormaker-statefulset.yaml

# Wait until the pod mirrormaker-0 is Running

k get pods -w

# Open a shell in the connect container of the pod mirrormaker-0

k exec -ti mirrormaker-0 -c connect -- bash

# Create the MirrorMaker 2.0 connector in Kafka Connect

curl -X POST -H "Content-Type: application/json" mirrormaker-0.mirrormaker:8083/connectors -d \
'{
  "name": "MirrorSourceConnector",
  "config": {
    "connector.class": "org.apache.kafka.connect.mirror.MirrorSourceConnector",
    "source.cluster.alias": "production",
    "target.cluster.alias": "backup",
    "source.cluster.bootstrap.servers": "kafka-0.kafka:9092,kafka-1.kafka:9092",
    "source.cluster.group.id": "mirror_maker_consumer",
    "source.cluster.enable.auto.commit": "true",
    "source.cluster.auto.commit.interval.ms": "1000",
    "source.cluster.session.timeout.ms": "30000",
    "source.cluster.security.protocol": "SSL",
    "source.cluster.ssl.truststore.location": "/certs/kafkaCA-trusted.jks",
    "source.cluster.ssl.truststore.password": "kafkapilot",
    "source.cluster.ssl.truststore.type": "JKS",
    "source.cluster.ssl.keystore.location": "/certs/kafka-consumer.jks",
    "source.cluster.ssl.keystore.password": "kafkapilot",
    "source.cluster.ssl.keystore.type": "JKS",
    "target.cluster.bootstrap.servers": "localhost:9092",
    "target.cluster.compression.type": "none",
    "topics": ".*",
    "rotate.interval.ms": "1000",
    "key.converter.class": "org.apache.kafka.connect.converters.ByteArrayConverter",
    "value.converter.class": "org.apache.kafka.connect.converters.ByteArrayConverter"
  }
}'

14.2. Checking replication

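If everything went well, the topic production.topicname appears in the backup cluster. MirrorMaker 2.0 adds the "production." prefix to avoid replication loops, for example when replication is configured in active-active mode.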
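The naming convention can be sketched in a few lines of shell. This is a simplified illustration of how the default replication policy names remote topics (source alias, separator, topic name), not MirrorMaker's actual code. The function names are hypothetical.

```shell
#!/bin/sh
# Name of a replicated topic on the target cluster: <source alias><separator><topic>.
# Arguments: source cluster alias, topic name, optional separator (defaults to ".").
remote_topic() {
  printf '%s%s%s\n' "$1" "${3:-.}" "$2"
}

# Source cluster alias encoded in a topic name (the text before the first ".").
# Prints an empty line for a topic that carries no prefix.
topic_source() {
  case "$1" in
    *.*) printf '%s\n' "${1%%.*}" ;;
    *)   printf '\n' ;;
  esac
}

remote_topic production topicname
topic_source production.topicname
```

Because the origin is encoded in the name, a flow running in the opposite direction can recognise that production.topicname came from production and avoid sending it back there.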

# Open a shell in the kafka container of the pod mirrormaker-0

k exec -ti mirrormaker-0 -c kafka -- bash

# List the topics

bin/kafka-topics.sh --list --bootstrap-server mirrormaker-0.mirrormaker:9092

# Read messages from the topic production.topicname

bin/kafka-console-consumer.sh \
  --bootstrap-server mirrormaker-0.mirrormaker:9092 \
  --topic production.topicname \
  --from-beginning

If the topic production.topicname was not created, check the Kafka Connect logs for errors:

k logs mirrormaker-0 -c connect

ERROR WorkerSourceTask{id=MirrorSourceConnector-0} Failed to flush, timed out while waiting for producer to flush outstanding 1 messages (org.apache.kafka.connect.runtime.WorkerSourceTask:438)

ERROR WorkerSourceTask{id=MirrorSourceConnector-0} Failed to commit offsets (org.apache.kafka.connect.runtime.SourceTaskOffsetCommitter:114)

The problem may be resolved by changing the producer.buffer.memory parameter:

k exec -ti mirrormaker-0 -c connect -- bash

curl -X PUT -H "Content-Type: application/json" mirrormaker-0.mirrormaker:8083/connectors/MirrorSourceConnector/config -d \
'{
  "connector.class": "org.apache.kafka.connect.mirror.MirrorSourceConnector",
  "source.cluster.alias": "production",
  "target.cluster.alias": "backup",
  "source.cluster.bootstrap.servers": "kafka-0.kafka:9092,kafka-1.kafka:9092",
  "source.cluster.group.id": "mirror_maker_consumer",
  "source.cluster.enable.auto.commit": "true",
  "source.cluster.auto.commit.interval.ms": "1000",
  "source.cluster.session.timeout.ms": "30000",
  "source.cluster.security.protocol": "SSL",
  "source.cluster.ssl.truststore.location": "/certs/kafkaCA-trusted.jks",
  "source.cluster.ssl.truststore.password": "kafkapilot",
  "source.cluster.ssl.truststore.type": "JKS",
  "source.cluster.ssl.keystore.location": "/certs/kafka-consumer.jks",
  "source.cluster.ssl.keystore.password": "kafkapilot",
  "source.cluster.ssl.keystore.type": "JKS",
  "target.cluster.bootstrap.servers": "localhost:9092",
  "target.cluster.compression.type": "none",
  "topics": ".*",
  "rotate.interval.ms": "1000",
  "producer.buffer.memory": "1000",
  "key.converter.class": "org.apache.kafka.connect.converters.ByteArrayConverter",
  "value.converter.class": "org.apache.kafka.connect.converters.ByteArrayConverter"
}'

15. Jmeter

# Build the jmeter-base image

sudo docker build -t jmeter-base:local-v1 -f dockerfile/jmeter-base.dockerfile .

# Create the service for Jmeter

k apply -f service/jmeter-svc.yaml

# Start the Jmeter pods that will generate the load

k apply -f statefulset/jmeter-statefulset.yaml

Create the pod jmeter-producer and run the producer.jmx script:

k run --rm -i --tty jmeter-producer --image=jmeter-base:local-v1 -- bash ./jmeter -n -t /tests/producer.jmx -r -Jremote_hosts=jmeter-0.jmeter:1099,jmeter-1.jmeter:1099

Create the pod jmeter-consumer and run the consumer.jmx script:

k run --rm -i --tty jmeter-consumer --image=jmeter-base:local-v1 -- bash ./jmeter -n -t /tests/consumer.jmx -r -Jremote_hosts=jmeter-2.jmeter:1099,jmeter-3.jmeter:1099

16. Cleanup

Delete the statefulsets:

k delete statefulset jmeter zookeeper kafka mirrormaker

Delete the container images:

sudo docker rmi -f zookeeper-base:local-v1 kafka-base:local-v1 jmeter-base:local-v1

Delete the services:

k delete svc grafana jmeter kafka mirrormaker zookeeper

Delete the ServiceMonitor:

k delete servicemonitor jmxexporter