哈Ha!如您所知,数据集是机器学习的动力。诸如Kaggle,ImageNet,Google Dataset Search和Visual Genom之类的网站是人们通常使用并从人们那里听到的数据集的来源,但是我很少看到使用诸如Bing Image Search之类的网站来查找数据的人。和Instagram。因此,在本文中,我将向您展示通过用Python编写两个小程序从这些来源获取数据有多么容易。

必应图片搜索

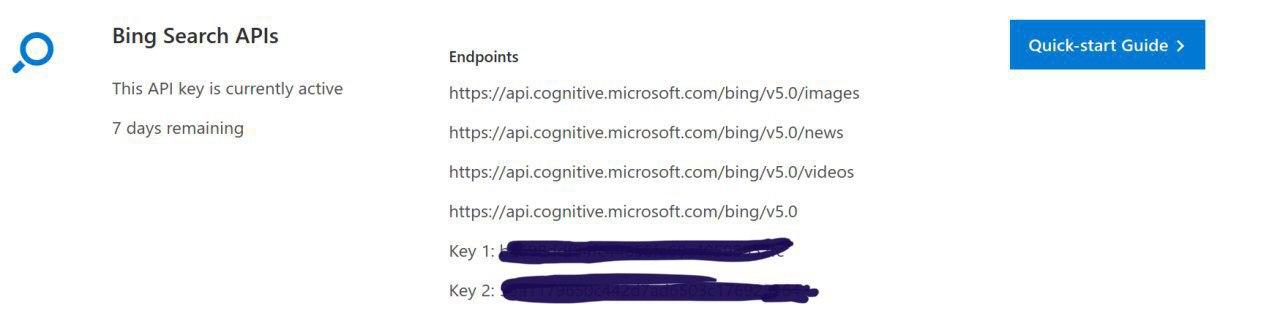

首先要做的是点击链接,单击“获取API密钥”按钮,然后使用任何建议的社交网络(Microsoft,Facebook,LinkedIn或GitHub)进行注册。注册过程完成后,您将被重定向到“您的API”页面,该页面应如下所示(被掩盖的是您的API密钥):

让我们继续编写代码。我们导入所需的库:

from requests import exceptions

import requests

import cv2

import os接下来,您需要指定一些参数:API密钥(您需要选择两个建议的密钥之一),指定搜索条件,每个请求的最大图像数量以及最终URL:

subscription_key = "YOUR_API_KEY"

search_terms = ['girl', 'man']

number_of_images_per_request = 100

search_url = "https://api.cognitive.microsoft.com/bing/v7.0/images/search"现在,我们将编写三个小功能:

1)为每个搜索词创建一个单独的文件夹:

def create_folder(name_folder):

path = os.path.join(name_folder)

if not os.path.exists(path):

os.makedirs(path)

print('------------------------------')

print("create folder with path {0}".format(path))

print('------------------------------')

else:

print('------------------------------')

print("folder exists {0}".format(path))

print('------------------------------')

return path

2)以JSON返回服务器响应的内容:

def get_results():

search = requests.get(search_url, headers=headers,

params=params)

search.raise_for_status()

return search.json()3) :

def write_image(photo):

r = requests.get(v["contentUrl"], timeout=25)

f = open(photo, "wb")

f.write(r.content)

f.close():

for category in search_terms:

folder = create_folder(category)

headers = {"Ocp-Apim-Subscription-Key": subscription_key}

params = {"q": category, "offset": 0,

"count": number_of_images_per_request}

results = get_results()

total = 0

for offset in range(0, results["totalEstimatedMatches"],

number_of_images_per_request):

params["offset"] = offset

results = get_results()

for v in results["value"]:

try:

ext = v["contentUrl"][v["contentUrl"].

rfind("."):]

photo = os.path.join(category, "{}{}".

format('{}'.format(category)

+ str(total).zfill(6), ext))

write_image(photo)

print("saving: {}".format(photo))

image = cv2.imread(photo)

if image is None:

print("deleting: {}".format(photo))

os.remove(photo)

continue

total += 1

except Exception as e:

if type(e) in EXCEPTIONS:

continue:

from selenium import webdriver

from time import sleep

import pyautogui

from bs4 import BeautifulSoup

import requests

import shutil, selenium, geckodriver. , , #bird. 26 . , geckodriver, , :

browser=webdriver.Firefox(executable_path='/path/to/geckodriver')

browser.get('https://www.instagram.com/explore/tags/bird/') 6 , :

1) . login.send_keys(' ') password.send_keys(' ') :

def enter_in_account():

button_enter = browser.find_element_by_xpath("//*[@class='sqdOP L3NKy y3zKF ']")

button_enter.click()

sleep(2)

login = browser.find_element_by_xpath("//*[@class='_2hvTZ pexuQ zyHYP']")

login.send_keys('')

sleep(1)

password = browser.find_element_by_xpath("//*[@class='_2hvTZ pexuQ zyHYP']")

password.send_keys('')

enter = browser.find_element_by_xpath(

"//*[@class=' Igw0E IwRSH eGOV_ _4EzTm ']")

enter.click()

sleep(4)

not_now_button = browser.find_element_by_xpath("//*[@class='sqdOP yWX7d y3zKF ']")

not_now_button.click()

sleep(2)2) :

def find_first_post():

sleep(3)

pyautogui.moveTo(450, 800, duration=0.5)

pyautogui.click(), , , - , , , moveTo() .

3) :

def get_url():

sleep(0.5)

pyautogui.moveTo(1740, 640, duration=0.5)

pyautogui.click()

return browser.current_url, : .

4) html- :

def get_html(url):

r = requests.get(url)

return r.text5) URL :

def get_src(html):

soup = BeautifulSoup(html, 'lxml')

src = soup.find('meta', property="og:image")

return src['content']6) . filename :

def download_image(image_name, image_url):

filename = 'bird/bird{}.jpg'.format(image_name)

r = requests.get(image_url, stream=True)

if r.status_code == 200:

r.raw.decode_content = True

with open(filename, 'wb') as f:

shutil.copyfileobj(r.raw, f)

print('Image sucessfully Downloaded')

else:

print('Image Couldn\'t be retreived') . , , , , . , pyautogui, , , . , , .

, Ubuntu 18.04. GitHub.